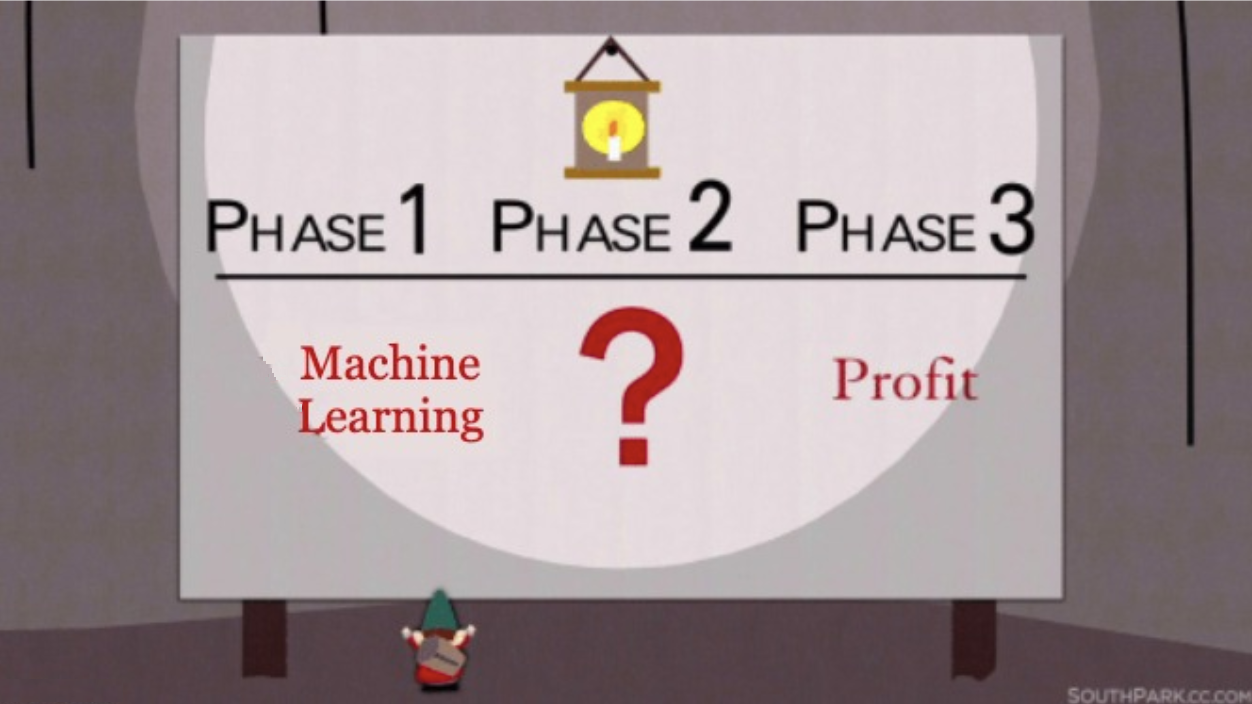

This is a true story of how I lost money using machine learning (ML) to bet on Counter-Strike: Global Offensive (CS:GO). The project was done with a friend, who gave me permission to share this story in public. We worked on it together in 2019, but the lessons have stuck with me and I want to share them with the world.

Just to make sure I got the numbers right, as this was a long time ago, I retrieved my bets from the betting website to plot my cumulative returns:

As you can see, I lost a lot of money very quickly, then my losses plateaued until I grew bored and decided to cut my losses. My friend persisted and he ended up making a 7.5% return on investment (ROI)! We did have an edge after all and I squandered it. This and the next post are a lesson on both how to bet with ML and how to avoid my mistakes. The lessons should apply more broadly than just for e-sports betting, as the concepts to be discussed can be applied to other financial domains like credit, fraud and marketing.

First, in this post, I go over the foundations needed to understand how to make financial decisions with ML:

- What is your edge?

- Financial decision-making with ML

  - One bet: Expected profits and decision rule

  - Multiple bets: The Kelly criterion

- Probability calibration

- Winner’s curse

Second, in the next post, I go over our actual solution:

- CS:GO basics

- Data scraping

- Feature engineering

- TrueSkill

- Inferential vs predictive models

- Dataset

- Modelling

- Evaluation

- Backtesting

- Why I lost 1000 euros

Foundations

What is your edge?

If you’re playing a poker game and you look around the table and you can’t tell who the sucker is, it’s you.

If you want to make money by betting or trading, you need to have an edge. While the efficient market hypothesis is a good first approximation and suggests edges shouldn’t exist, clearly there are situations where you can exploit market inefficiencies for monetary gains. That is your edge. That is how funds like Renaissance Technologies make money over decades. For a clear first-person narrative on finding edges and beating casinos and markets, read the biography of Ed Thorp, who proved card counting could be profitable and pioneered quantitative finance. More on him later.

According to Agustin Lebron in The Laws of Trading, an edge is defined as “the set of reasons, the explanation, why you think a given trade has a positive expected value. In other words, why does that trade make money on average?”. More importantly, he highlights that if you can’t explain your edge in 5 minutes, you probably don’t have a very good one.

Watch out for anecdotal evidence of anyone beating the market, as it could solely be due to survivorship bias. Edges are not sold on the public market, so be skeptical of anyone trying to sell you a way to make money. Whenever an edge becomes public, it’s not an edge anymore.

That is why you should read about failure stories, like mine, as they carry important lessons not contaminated by bad incentives or biases. Nassim Taleb, for example, recommends the book What I Learned Losing a Million Dollars as “one of the rare noncharlatanic books in finance”.

Our edge, or so we thought, was to use the power of tabular ML to beat the bookmakers. We had worked previously with credit, fraud, and insurance, and we believed our skill set in those areas would translate to an advantage here. While that’s a flimsy argument at face value, the backtests we ran indicated we did indeed have an edge (more on that in the next post). We chose CS:GO in particular because enough match data was publicly available for us to scrape and feed into our models. Plus, we thought it’d be a much more incipient market compared to horse racing or football betting.

Financial decision-making with ML

How can you use ML for sports betting? That has a surprisingly long history: decades ago, logistic regression was already being used to bet on horse racing and made some people rich. Essentially, it’s no different from using ML in any other financial decision-making application, such as giving loans or stopping fraudulent transactions.

Let’s work backward from a simple profit equation. Profits can be defined as revenue minus costs[^1], as a function of the actions \(a\) you take. Examples of actions are placing a bet, providing credit, or blocking a transaction. You can simply write it as:

\[ \text{Profits}(a) = \sum \text{Revenue}(a) - \sum \text{Cost}(a) \]

While simple and naive, defining the revenue and costs for your product or business can be an illuminating exercise. I’d claim that is what differentiates “Kagglers” from money-making data scientists. For more on this topic, read my older post The Hierarchy of Machine Learning Needs. Let’s define action, revenue and cost for the aforementioned examples:

- Betting

  - Action: Bet size (between 0 and the limit offered by the betting house)

  - Expected revenue: Bet size \(\cdot\) Odds \(\cdot\) **Probability of winning**

  - Expected cost: Bet size \(\cdot\) (1 \(-\) **Probability of winning**)

- Loans

  - Action: Loan amount (between 0 and some limit imposed by the bank)

  - Revenue: Interest rate \(\cdot\) Loan amount

  - Expected cost: **Probability of default** \(\cdot\) Loan amount

- Fraud

  - Action: Blocking the transaction or not (binary)

  - Revenue: Transaction amount \(\cdot\) Margins

  - Expected cost: Transaction amount \(\cdot\) **Probability of fraud**

ML can help by filling in the blanks (in bold): ML models can predict the probability of winning a bet, defaulting on a loan, or transaction fraud. With such probabilities and the other fixed quantities known (like the interest rate), you can choose an action a that maximizes the profits. Let’s work that out in the case of betting.
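As a rough illustration of the loan and fraud rows above, here is a minimal sketch of the expected profit of each action, assuming the probabilities come from some model. The function names and the numbers are made up for illustration and do not come from a real system.

```python
def expected_profit_loan(amount, interest_rate, p_default):
    """Expected profit of a loan: interest earned minus expected loss."""
    revenue = interest_rate * amount
    expected_cost = p_default * amount
    return revenue - expected_cost


def expected_profit_transaction(amount, margin, p_fraud):
    """Expected profit of approving a transaction instead of blocking it."""
    revenue = amount * margin
    expected_cost = amount * p_fraud
    return revenue - expected_cost


# Hypothetical numbers, for illustration only
loan = expected_profit_loan(amount=1_000, interest_rate=0.10, p_default=0.04)
txn = expected_profit_transaction(amount=200, margin=0.03, p_fraud=0.05)

print(f"Loan: {loan:+.2f}")         # positive, so lending is worthwhile
print(f"Transaction: {txn:+.2f}")   # negative, so blocking is the better action
```

Under these simplified definitions, lending is worthwhile whenever the probability of default is below the interest rate, and approving a transaction is worthwhile whenever the probability of fraud is below the margin.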

One bet: Expected profits and decision rule

Say you have a match between two teams A and B. Assume that the probability of A beating B is P. If you place a bet of \$10 at odds of 2 (that is, a net win of \$2 for every \$1 staked), you will net \$20 if A wins and lose \$10 if A loses. The expected value of the bet on A is:

\[\begin{align} E[Profits(bet=10)] &= E[Revenue(bet=10)] - E[Cost(bet=10)] \\ &= 2 \cdot 10 \cdot P - 10 \cdot (1 - P) \\ &= 30 \cdot P - 10 \end{align}\]

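This leads to the following decision rule: if \(P > 1/3\), the bet on A has a positive expected value and you should take it. If \(P < 1/3\), you should not bet on A; whether a bet on B is worthwhile depends on the odds offered on B. If \(P = 1/3\), the expected profit is zero and there is no reason to bet on A. This also implies that, if the market is efficient, betting odds of 2 imply a probability of winning of 1/3.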

Now, let’s make it broader and consider betting odds on A to be \(O_A\) and that the probability of A beating B is \(P(X_A, X_B)\), where \(X\) are the features of each team (say, their latest result):

\[\begin{align} E[Profits(bet)] &= E[Revenue(bet)] - E[Cost(bet)] \\ &= O_A \cdot bet \cdot P(X_A, X_B) - bet \cdot (1 - P(X_A, X_B)) \end{align}\]

Betting decision rule

Based on the last equation, you should bet on A if \(P(X_A, X_B) > \frac{1}{O_A+1}\), where \(\frac{1}{O_A+1}\) is the implied probability of A winning given by the betting odds. Conversely, you can consider the same for betting on B given the odds \(O_B\). That is a strategy that provides you with positive expected profits for each bet. Excluding ties, the implied probability of A beating B and B beating A usually sum up to more than 1: that is the bookmaker’s margin, a major way they make money, so always evaluate betting on A and on B independently.
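To see the bookmaker's margin in numbers, here is a small sketch that converts odds into implied probabilities. It assumes the same odds convention as the example above (net profit per unit staked), and the odds for A and B are hypothetical.

```python
def implied_probability(odds):
    """Implied win probability for net odds (profit per unit staked)."""
    return 1.0 / (odds + 1.0)


# Hypothetical odds for a match between A and B
odds_a, odds_b = 0.8, 1.0

p_a = implied_probability(odds_a)
p_b = implied_probability(odds_b)
margin = p_a + p_b - 1.0

print(f"Implied P(A wins) = {p_a:.3f}")
print(f"Implied P(B wins) = {p_b:.3f}")
print(f"Bookmaker margin  = {margin:.3f}")
```

The two implied probabilities add up to more than 1, and the excess is the margin you have to overcome before a bet on either side becomes profitable.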

In practice, a bet on A should only be made if \(P(X_A, X_B) > \frac{1}{O_A+1} + \delta\)[^2]. The reason for the \(\delta\) is that you need to account for the model prediction error and the frictions in the betting process. You can find the optimal value of \(\delta\) by backtesting your model under different values of \(\delta\) and choosing the one that maximizes your profits (ROI), which I will do in the next post.
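The decision rule can be sketched in a few lines of code. The function names are my own, the probability of 0.5 is an assumption for the sake of the example, and the value of `delta` is arbitrary rather than a recommendation.

```python
def expected_profit(bet, odds, p_win):
    """Expected profit of a bet at net odds, following the equation above."""
    return odds * bet * p_win - bet * (1.0 - p_win)


def should_bet(p_win, odds, delta=0.0):
    """Bet only if the model probability beats the implied one by delta."""
    return p_win > 1.0 / (odds + 1.0) + delta


# The running example: $10 at odds of 2, with an assumed P = 0.5
print(expected_profit(bet=10, odds=2, p_win=0.5))  # 5.0
print(should_bet(p_win=0.5, odds=2))                # True
print(should_bet(p_win=0.36, odds=2, delta=0.05))   # False
```

The last call shows the effect of the safety margin: a predicted probability of 0.36 beats the implied probability of 1/3, but not by enough to clear a \(\delta\) of 0.05.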

One bet is not usually made in isolation, as there are other potential bets you can make in the present and future. The question then becomes not just about betting or not, but rather how much to bet on each match. For simplicity, I will assume bets are made sequentially and on a limited bankroll. How do you maximize the expected value of all your future bets?

Multiple bets: The Kelly criterion

A typical answer is the Kelly criterion. That is a formula used to determine the optimal size of a series of bets, developed by John Kelly in 1956. It aims to maximize the expected value of the logarithm of your wealth (bankroll)[^3]. I will not bore you with its derivation here, but the end result is a simple formula that provides the fraction of your bankroll to wager for each bet given the odds and the probability of winning:

\[ \text{Fraction} = \frac{O \cdot P(X_A, X_B) - (1 - P(X_A, X_B))}{O} \]

Let’s go back to the first example and assume \(P(X_A, X_B)\) to be 0.5, that is, even probability of A beating B (which makes it a profitable bet in expectation as odds of 2 imply only 1/3 chance of A beating B). The optimal fraction of the bankroll to wager would be:

\[ \text{Fraction} = \frac{2 \cdot 0.5 - 0.5}{2} = 0.25 \]

That is, you should bet 25% of your bankroll! That sounds suspiciously large, given that you only have a 50% chance of winning.
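For reference, the formula is a one-liner in code. This is a minimal sketch; clipping negative fractions to zero (never betting without an edge) is my own addition and not part of the formula above.

```python
def kelly_fraction(p_win, odds):
    """Fraction of the bankroll to wager at net odds; 0 if there is no edge."""
    fraction = (odds * p_win - (1.0 - p_win)) / odds
    return max(fraction, 0.0)


print(kelly_fraction(p_win=0.5, odds=2))  # 0.25
print(kelly_fraction(p_win=0.2, odds=2))  # 0.0 (negative edge, clipped)
```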

Unfortunately, the Kelly criterion is not reliable for real betting or trading, as it assumes that the probabilities are known with no uncertainty, which is almost never the case[^4]. That makes it way too aggressive and allows for too much variance in the bankroll. The best way to visualize that is by looking at the relationship between the bankroll growth rate and the fraction of bankroll wagered:

```python
import numpy as np
import plotly.express as px
import plotly.graph_objects as go

# Parameters of the bet
p = 0.5      # probability of winning
q = 1.0 - p  # probability of losing
b = 2        # net odds: fraction of the stake gained on a win
a = 1        # fraction of the stake lost on a loss


def growth_rate(f, p, q, b, a):
    """Expected log-growth of the bankroll when wagering a fraction f."""
    return p * np.log(1 + b * f) + q * np.log(1 - a * f)


# Generate data points for plotting
f_values = np.linspace(0, 0.75, 500)  # wagered fraction from 0% to 75%
r_values = growth_rate(f_values, p, q, b, a)

# Base plot with Plotly Express
fig = px.line(
    x=f_values,
    y=r_values,
    labels={"x": "Wagered fraction", "y": "Growth rate"},
    title="Growth rate as a function of wagered fraction",
)

# Format both axes as percentages
fig.update_layout(
    width=600,
    height=600,
    xaxis_tickformat=",.0%",
    yaxis_tickformat=",.1%",
)

# Vertical line at the Kelly fraction (growth rate there is about 0.059)
fig.add_shape(
    type="line",
    x0=0.25, y0=0,
    x1=0.25, y1=0.059,
    line=dict(color="Red", dash="dot"),
)

# Annotation pointing at the optimum
fig.add_annotation(
    text='Optimum "Kelly" bet',
    xref="x", yref="y",
    x=0.25, y=0.059,
    showarrow=True, arrowhead=4,
    ax=60, ay=-40,
)

# Parameters as a footnote below the plot
param_str = f"Parameters: P_winning={p}, Odds={b}"
fig.add_annotation(
    text=param_str,
    xref="paper", yref="paper",
    x=0, y=-0.15,
    showarrow=False,
    align="left",
    font=dict(size=10),
)

fig.show()
```

Note the asymmetry: if you bet too little, you will not grow your bankroll fast enough. But if you bet too much, you will lose your bankroll very quickly. The Kelly criterion is the point where the growth rate is maximized, but the curve is flat around that point, which means that you can bet less and still get a high growth rate without the risk associated with the “optimal betting point”.

This is more general than it seems: when I worked in performance marketing, the curve between profits and marketing spend was surprisingly similar. A former colleague writes about it here: Forecasting Customer Lifetime Value - Why Uncertainty Matters.

How much should you actually bet, then? A simple heuristic is to always bet a fixed amount, no matter what. That is what I’d suggest when starting and figuring out whether you have an edge and other elements of your strategy. Another suggestion is using the half-Kelly, that is, half of what the Kelly criterion implies. That forms a good compromise between the two extremes, but should only be used when you can trust both your model and betting strategy.
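To get a feeling for why betting less than Kelly is safer, here is a small sketch comparing the growth rate of full and half Kelly when the model is right and when it is overconfident. The "true" probability of 0.4 is a made-up scenario, and the function is a plain-Python version of the growth rate plotted earlier.

```python
import math


def growth_rate(fraction, p_win, odds):
    """Expected log-growth of the bankroll per bet at net odds."""
    return p_win * math.log(1 + odds * fraction) + (1 - p_win) * math.log(1 - fraction)


odds = 2
p_model = 0.5  # probability the model believes in
p_true = 0.4   # hypothetical true probability (the model is overconfident)

full_kelly = (odds * p_model - (1 - p_model)) / odds  # 0.25
half_kelly = full_kelly / 2                           # 0.125

for name, f in [("full Kelly", full_kelly), ("half Kelly", half_kelly)]:
    print(
        f"{name}: growth if the model is right = {growth_rate(f, p_model, odds):+.4f}, "
        f"growth if the true P is 0.4 = {growth_rate(f, p_true, odds):+.4f}"
    )
```

In this scenario, half-Kelly gives up about a quarter of the growth rate when the model is right, but the bankroll still grows when the model is overconfident, whereas full Kelly shrinks it.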

Probability calibration

Some of the assumptions above are not realistic. The probability \(P(X_A, X_B)\) is never exactly known, even if it can be predicted by an ML model trained on historical data (which is the major theme of the second post in this series). And even if you have a good model for that, it doesn’t mean that its probabilities are calibrated. The approach also assumes the data-generating process to be stationary[^5], a further complication discussed in the second post.

Before we go on further, we need to actually define what probability calibration means: if the model predicts a probability of \(X\%\), the predicted event should happen \(X\%\) of the time. This can be better visualized with a calibration plot, where the dotted blue line represents a perfectly calibrated model and the black line represents the uncalibrated predictions of a fake model:

```python
import plotly.graph_objects as go

# Calibration data of a made-up model
mean_predicted_values = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
fraction_of_positives = [0.05, 0.1, 0.2, 0.35, 0.5, 0.65, 0.8, 0.9, 0.95]

fig = go.Figure()

# Add the perfectly calibrated line
fig.add_trace(go.Scatter(
    x=[0, 1], y=[0, 1],
    mode="lines", name="Perfectly calibrated",
    line=dict(dash="dot"),
))

# Add the model's calibration curve
fig.add_trace(go.Scatter(
    x=mean_predicted_values, y=fraction_of_positives,
    mode="lines+markers", name="Actual model",
    line=dict(color="black"),
))

# Set layout properties
fig.update_layout(
    title="Calibration plot",
    xaxis_title="Mean predicted value",
    yaxis_title="Fraction of positives",
    xaxis=dict(tickvals=[i / 10 for i in range(11)], range=[0, 1]),
    yaxis=dict(tickvals=[i / 10 for i in range(11)], range=[0, 1]),
    showlegend=True,
)

fig.show()
```

Note that calibration is not enough: if your model predicts 50% for a coin flip, it’s perfectly calibrated but also totally useless!

How do you calibrate a model? If your loss function is a proper scoring rule, like the negative log-likelihood (NLL), it should come calibrated by default[^6]. But if your loss doesn’t lead to calibration or if empirically you see calibration issues, you can always use a calibration method:

- Platt scaling: logistic regression on the output of the model, usually applied to smooth predictors like neural networks

- Isotonic regression: non-parametric method that fits a piecewise-constant non-decreasing function to the data, usually applied to tree-based models like XGBoost
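Both methods are available in scikit-learn through `CalibratedClassifierCV`. The sketch below is only an illustration on synthetic data: the dataset, the choice of naive Bayes as the base model (which often produces poorly calibrated probabilities) and the number of folds are all assumptions, not what we used in the project.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.metrics import brier_score_loss
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Synthetic binary classification data, standing in for real match data
X, y = make_classification(n_samples=5_000, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

models = {
    "uncalibrated": GaussianNB(),
    "Platt scaling": CalibratedClassifierCV(GaussianNB(), method="sigmoid", cv=5),
    "isotonic": CalibratedClassifierCV(GaussianNB(), method="isotonic", cv=5),
}

# Fit each variant and score its predicted probabilities on held-out data
brier = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    p_test = model.predict_proba(X_test)[:, 1]
    brier[name] = brier_score_loss(y_test, p_test)
    print(f"{name}: Brier score = {brier[name]:.4f}")
```

The calibrator is fit with cross-validation so that it never sees the predictions of a model on its own training data. Whether either method actually helps depends on the model and the data, so compare the scores on a held-out set.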

Measuring calibration is tricky as there is a trade-off between calibration and discrimination. As the coin flip example shows, a dumb model can be calibrated, while a model with amazing discrimination powers could be uncalibrated. Therefore, you need to look at both calibration and discrimination (accuracy, AUC, precision-recall, etc) separately. With respect to the calibration part alone, I suggest two assessments:

1. Calibration plot (like shown above): visual inspection always helps make sense of the data

2. Brier score / negative log-likelihood: they are proper scoring rules and they balance calibration and discrimination, so the smaller the better in both senses (0 is perfect but usually not achievable)

If you want to automate the visual inspection in (1), you can use the expected calibration error (ECE), which is the average difference between the accuracy and the confidence of the predictions. The smaller the ECE, the better the calibration. For more on this topic, read On Calibration of Modern Neural Networks.
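These metrics are short enough to write by hand. The sketch below uses simulated predictions, so the data and the distortion that produces the overconfident model are assumptions. The ECE here is the binary-outcome variant, comparing the observed frequency with the mean predicted probability in equal-width bins.

```python
import numpy as np


def brier_score(y_true, p_pred):
    """Mean squared difference between predicted probability and outcome."""
    return np.mean((p_pred - y_true) ** 2)


def negative_log_likelihood(y_true, p_pred, eps=1e-12):
    """Average negative log-likelihood (log loss) of binary outcomes."""
    p = np.clip(p_pred, eps, 1 - eps)
    return -np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))


def expected_calibration_error(y_true, p_pred, n_bins=10):
    """Weighted average gap between observed frequency and mean prediction per bin."""
    bins = np.minimum((p_pred * n_bins).astype(int), n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        mask = bins == b
        if mask.any():
            gap = abs(y_true[mask].mean() - p_pred[mask].mean())
            ece += mask.mean() * gap
    return ece


# Simulated predictions: outcomes are drawn from the predicted probabilities,
# so the "calibrated" predictions are calibrated by construction
rng = np.random.default_rng(0)
p_calibrated = rng.uniform(0.05, 0.95, size=50_000)
y = (rng.uniform(size=p_calibrated.size) < p_calibrated).astype(float)

# Push the same predictions away from 0.5 to mimic an overconfident model
p_overconfident = np.clip(0.5 + 1.5 * (p_calibrated - 0.5), 0.01, 0.99)

for name, p in [("calibrated", p_calibrated), ("overconfident", p_overconfident)]:
    print(
        f"{name}: ECE = {expected_calibration_error(y, p):.3f}, "
        f"Brier = {brier_score(y, p):.3f}, NLL = {negative_log_likelihood(y, p):.3f}"
    )
```

Both sets of predictions rank the matches in exactly the same order, so they have the same discrimination. Only the calibration-sensitive metrics tell them apart.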

Winner’s curse

If it sounds too good to be true, it probably is!

The winner’s curse is a phenomenon that occurs when buyers overpay for something that they won in an auction, a form of selection bias. Consider the following toy experiment: say you have \(N\) people bidding for a product, the true value of the product is \(V\), and each person estimates the value of the product as \(v \sim \mathcal{N}(V, \sigma)\), where \(\mathcal{N}\) is a normal distribution and \(\sigma\) is the standard deviation of the estimate. This means that the value estimates are unbiased (correct on average). The person who bids the highest wins. Assuming a second-price auction[^7], what is the expected price paid by the winner?

Let’s assume the item is worth \$1000, the standard deviation of the estimates is \$50, and that there are 10 bidders. In a second-price auction, you bid your expected value of the item. If you win, you only have to pay the second-highest bid. We can simulate that easily:

```python
import numpy as np
import plotly.graph_objects as go

# Winner's curse simulation
V = 1000    # Real value of the item
N = 10      # Number of bidders
sigma = 50  # Standard deviation of the bidders' valuations

overpayment = []
for _ in range(10_000):  # Simulation samples
    v = np.random.normal(V, sigma, N)  # each bidder bids their own estimate
    price_paid = np.sort(v)[-2]  # the winner pays the second-highest bid
    overpayment.append(price_paid - V)

mean_overpayment = np.mean(overpayment)

# Create the histogram
fig = go.Figure()
fig.add_trace(go.Histogram(
    x=overpayment,
    name="Overpayment",
    marker=dict(color="blue"),
    opacity=0.5,
))

# Add a vertical line for the mean
fig.add_shape(
    type="line",
    x0=mean_overpayment,
    x1=mean_overpayment,
    y0=0,
    y1=1,
    yref="paper",
    line=dict(color="red", width=2, dash="dot"),
)

# Add the title, axis labels and an annotation for the mean
fig.update_layout(
    title="Winner's Curse: Histogram of Overpayment",
    xaxis_title="Overpayment ($)",
    yaxis_title="Frequency",
    annotations=[dict(
        x=mean_overpayment,
        y=0.9,
        yref="paper",
        showarrow=True,
        arrowhead=7,
        ax=0,
        ay=-40,
        text=f"Mean: {mean_overpayment:.2f}",
    )],
)

fig.show()
```

That is, the expected overpayment conditional on winning the auction is about \$50, 5% of the actual value of the item! What is surprising is that the winner’s curse can exist even if there are no biases in the estimates of the item’s value.

In auctions, it’s very difficult to be rid of the winner’s curse, as it depends on not just your estimate of the item’s value but also everyone else’s. Plus, it also depends on whether it’s a first- or second-price auction. For more, read Richard Thaler’s intro on the topic.
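To illustrate how much the auction format matters, the sketch below reuses the parameters of the simulation above and compares the two formats. It assumes that everyone naively bids their own estimate in both cases, which is a simplification: in a first-price auction, sensible bidders would bid below their estimate.

```python
import numpy as np

V, N, sigma = 1000, 10, 50  # same parameters as the simulation above
rng = np.random.default_rng(0)

# Each row is one auction, each column one bidder's unbiased estimate,
# sorted so that the last column holds the highest bid
estimates = np.sort(rng.normal(V, sigma, size=(10_000, N)), axis=1)

# Assumption: everyone naively bids their own estimate in both formats
first_price_overpayment = estimates[:, -1].mean() - V   # winner pays own bid
second_price_overpayment = estimates[:, -2].mean() - V  # winner pays runner-up bid

print(f"First-price:  {first_price_overpayment:.1f}")
print(f"Second-price: {second_price_overpayment:.1f}")
```

Under naive bidding, the first-price format is strictly worse for the winner, since the highest estimate is always at least as large as the second highest.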

From winner’s curse to winning strategy

For sports betting, consider the following: If your model prediction differs from the implied betting odds, which one is more likely to be wrong? Also, consider that whenever your model is wildly overconfident, that is when you will bet the most and most wrongly. That is a recipe for disaster, as even a perfectly calibrated and precise model on average could still lead to ruinous betting decisions if you only act when it’s wrong.
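The selection effect is easy to reproduce in a toy simulation. Everything below is an assumption made for illustration: the market is perfectly efficient with no margin, the model is the true probability plus unbiased noise, and the threshold `delta` is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
n_matches = 200_000

# Assumption: the market is efficient, with fair odds and no margin
p_true = rng.uniform(0.2, 0.8, size=n_matches)
odds = (1 - p_true) / p_true  # net odds whose implied probability is p_true

# The model is a noisy but unbiased estimate of the true probability
p_model = np.clip(p_true + rng.normal(0, 0.05, size=n_matches), 0.01, 0.99)

# Bet one unit on A whenever the model sees an edge of at least delta
delta = 0.05
implied = 1 / (odds + 1)
bets = p_model > implied + delta

# Simulate the match outcomes and the profit of each bet actually placed
won = rng.uniform(size=n_matches) < p_true
profit = np.where(won, odds, -1.0)[bets]

print(f"Matches bet on: {bets.mean():.1%}")
print(f"Mean model probability on bets: {p_model[bets].mean():.3f}")
print(f"Actual win rate on bets:        {won[bets].mean():.3f}")
print(f"Model error over all matches:   {(p_model - p_true).mean():+.4f}")
print(f"Realized ROI on bets:           {profit.mean():+.3f}")
```

Over all matches the model is right on average, yet on the matches it chooses to bet on, it systematically overestimates the probability of winning. Since there is no real edge in this setup, the realized ROI hovers around zero even though the model expected a profit on every single bet.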

The only protection against the winner’s curse and selection bias is extensive validation: backtest your strategy and paper-trade before spending real money. The backtest will provide you with a list of bets you would have made in the past and paper-trading will allow you to validate your strategy and collect data in real time.

With such “counterfactual” betting data, you can ensure that your model and strategy work not just on expectation but also for the bets you actually would have made. That is, you must evaluate your model metrics, including calibration, on the matches you would have bet on. You can also use this data to calculate the ROI and risk metrics (e.g. Sharpe ratio and maximum drawdown) of your betting strategy in order to further validate your edge and determine its profitability and risk[^8].
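Given the list of bets from a backtest, these metrics take only a few lines. The sketch below uses a made-up sequence of profits with unit stakes, and the Sharpe ratio is a simplified per-bet version (risk-free rate of zero, not annualized).

```python
import numpy as np


def roi(profits, stakes):
    """Total profit divided by total amount staked."""
    return np.sum(profits) / np.sum(stakes)


def max_drawdown(profits):
    """Largest peak-to-trough drop of the cumulative profit curve."""
    cumulative = np.concatenate([[0.0], np.cumsum(profits)])
    running_peak = np.maximum.accumulate(cumulative)
    return np.max(running_peak - cumulative)


def sharpe_ratio(profits):
    """Mean profit per bet divided by its standard deviation (not annualized)."""
    return np.mean(profits) / np.std(profits, ddof=1)


# Hypothetical backtest: unit stakes, profit per bet at net odds
stakes = np.ones(8)
profits = np.array([1.0, -1.0, -1.0, 0.8, 1.5, -1.0, 2.0, -1.0])

print(f"ROI: {roi(profits, stakes):.1%}")
print(f"Max drawdown: {max_drawdown(profits):.2f}")
print(f"Sharpe ratio per bet: {sharpe_ratio(profits):.2f}")
```

The maximum drawdown is worth watching in particular, since it tells you how much of your bankroll you must be prepared to lose before the strategy recovers.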

Even Ed Thorp, when he had a clear and quantified edge by card counting on blackjack, paper-traded and started small until he fully embraced the Kelly criterion. He actually made some mistakes and lost money early on until he figured out the proper winning strategy. Then, he adopted the Kelly criterion and made huge sums until he was banned from and threatened by many casinos. He then moved on to beat the stock market and became a billionaire.

Backtesting and paper-trading are not enough. You also should have entry and exit plans to validate every piece of your strategy before you go all-in. That is, you should have a plan for when to start and stop betting and when to increase your bets that is not model-dependent. Simulation is essential but it can only go so far, as there are always unknown unknowns that will only be surfaced when you close the loop and bet for real.

Conclusion

In this post, I went over some concepts you need to know to use ML for e-sports betting. They apply more broadly than just CS:GO betting and might be useful for credit, fraud and marketing decisions, as I can attest from my own previous experiences in all of those fields. For example, I developed risk models for credit lines that impacted the bank’s credit exposure by tens of millions of dollars using a profit-revenue-cost framework that was much more complex but similar in principle to what I describe in this post. I might write about that in more detail in the future, so let me know if that interests you.

I didn’t cover some important topics for financial decision-making which might not be that relevant for betting but might be for other areas, the most glaring omission being causal inference. For more on that, read Causal Inference in Python, written by a former colleague who worked with me in credit.

For the next post, to be released soon, I will go over how we actually implemented our solution, including the features and betting odds data, the modeling, evaluation and metrics, and the backtesting showing our strategy had a positive ROI in theory. Also, I comment on why I failed to make money given the theoretical edge. Stay tuned!

Acknowledgements

This post would not exist without the ideas of Ramon de Oliveira, who worked with me on the project and did a lot of the implementation. I also thank Raphael Tamaki and Erich Alves for their feedback on the post.

Footnotes

[^1]: For most businesses, what you really want to maximize is the net present value (NPV), which is the sum of cash flows over time, discounted back to their value in present terms. In other words, you care more about profits tomorrow than 10 years from now, but you just don’t want to ignore future profits. For simplicity, we will stick to present-day profits for now.

[^2]: In the paper Beating the bookies with their own numbers - and how the online sports betting market is rigged, their profit-making betting strategy is essentially \(P > \frac{1}{Avg(O)+1} + \delta\), where \(Avg(O)\) is the average betting odds across multiple bookmakers. If a bookie deviates from the average, it’s likely they are making a mistake which then becomes your edge. They show this strategy works in theory and in practice until the bookmakers start limiting your bets (as always, it’s impossible to reliably beat the market).

[^3]: That implies your utility for money is logarithmic, which is a reasonable assumption for most people. That is, if your wealth is \$100, you care a lot about winning or losing \$10. But if you are a millionaire, you would only start to worry about winning or losing hundreds of thousands of dollars. While not perfect, it’s a better assumption than linear utility, which would imply you care the same about winning or losing \$10 regardless of your wealth.

[^4]: There are some exceptions, like playing blackjack with card counting, where you can have a good estimate of the probability of winning, which was the case when Ed Thorp beat the dealers in Las Vegas in the 60s. More broadly, one should beware the Ludic fallacy, which is the misuse of games to model real-life situations, explained by Taleb as “basing studies of chance on the narrow world of games and dice”.

[^5]: The data-generating process is the probability distribution \(P(Y|X)\) that we are trying to model. In our case, \(Y\) is the outcome of the match (whether a team wins) and \(X\) are the features of the teams. Stationarity means that \(P(Y|X)\) does not change over time. This is related to, but not the same as, target shift (the distribution of \(Y\) changes over time) and covariate shift (the distribution of \(X\) changes over time), which are also possible complications but are not discussed further.

[^6]: Trust but verify: even if your model should be calibrated in principle, always make sure to assess its calibration empirically on the test set.

[^7]: First-price auctions: the winner pays the amount they bid. Second-price auctions: the winner pays the amount the second-highest bidder bid. Second-price auctions are interesting because the optimal bid is the expected value of the product, so they are adopted in some situations, e.g. Meta’s digital ad exchange.

[^8]: Risk and return typically represent a trade-off, where higher returns are associated with higher risks. You should only accept a strategy with high risks if it has high returns, otherwise it’s better to leave your money in treasury bills or index funds. The Sharpe ratio is one metric that captures this trade-off, but it’s not useful in the “extremistan” of fat-tailed returns (e.g. returns of stocks or options). Read The Black Swan before considering investing, trading or betting.