I explore the problem of name classification with ChatGPT and three machine learning models of increasing complexity: from logistic regression to FastAI LSTM to Hugging Face transformer. To see all the code and reproduce the results, check out the notebook.

Name classification

Can you classify a name as belonging to a person or company? Some are easy: “Google” is a company and “Pedro Tabacof” is a person. Some are trickier, like “John Deere”. With a labelled dataset, we can train a machine learning model to classify names into entities. This is a simplification of the more general task called Named Entity Recognition. It can also be seen as a simple version of document classification, where the document is just a name. Due to its simplicity and relation to typical NLP problems, name classification is a good candidate to experiment with different NLP technologies.

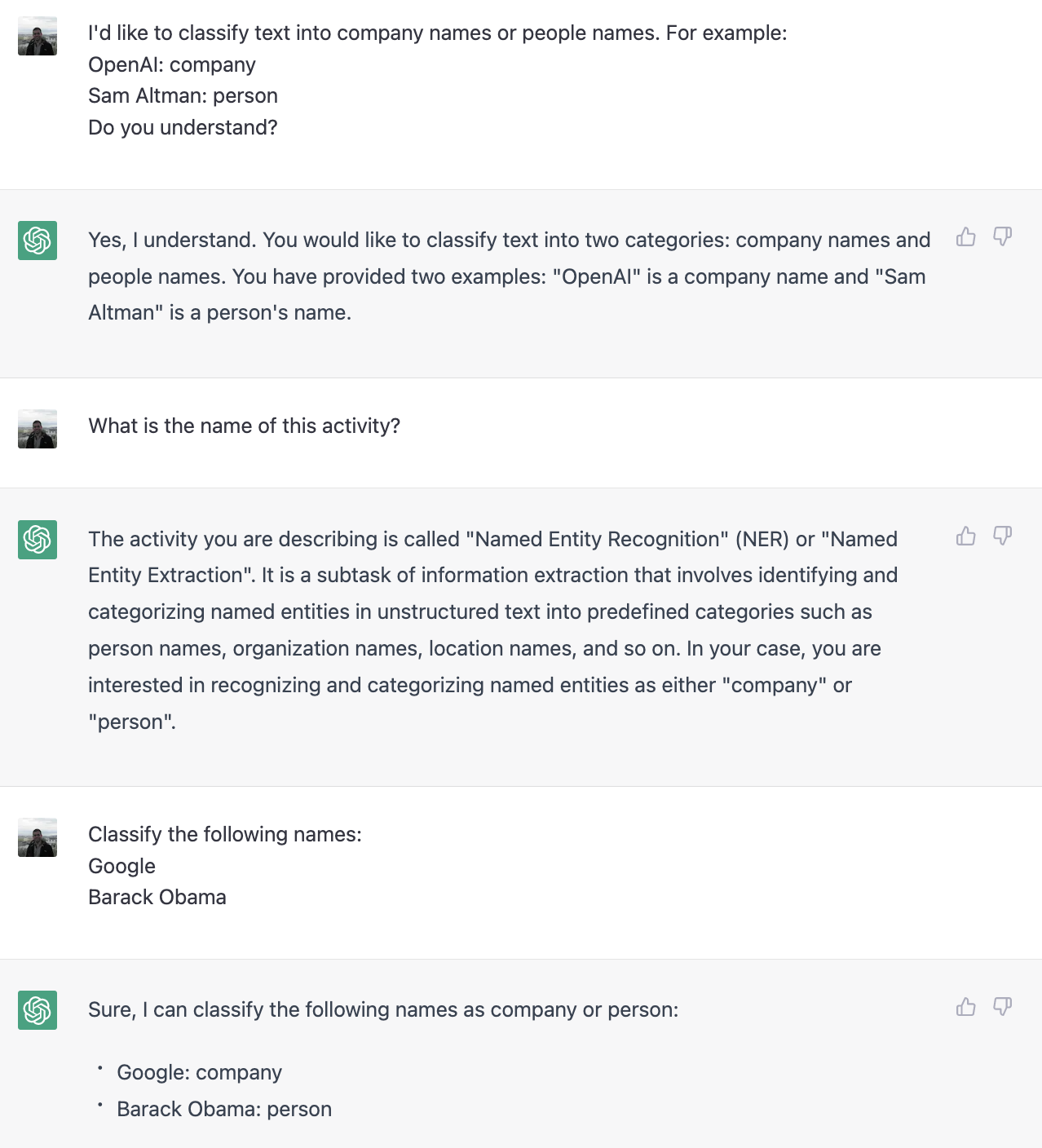

When I heard a friend was working on a name classification problem as part of a hiring process, I went straight to ChatGPT to look for answers. I soon realised that ChatGPT can do a great job itself classifying names into entities with just a couple of examples (one-shot learning):

Now, if I actually productionize that prompt using ChatGPT’s API, how would it compare to more traditional alternatives? In NLP, traditional might mean a model from just 5 years ago!

In this post, I explore four ways to classify names into person or company:

- Baseline using word counts and logistic regression: typical baseline for text classification

- FastAI LSTM fine-tuning (whole network): simple fine-tuning with few lines of code

- Hugging Face DistilBERT fine-tuning (head only): more involved neural network training using PyTorch

- ChatGPT API one-shot learning: only prompt engineering and post-processing are needed

I use two public datasets available on Kaggle: IMDb Dataset for people names and 7+ Million Company Dataset for companies. Those datasets are large, with almost 20 million names! The choice of datasets was inspired by the open-source business individual classifier by Matthew Jones, which achieves 95% accuracy on this name classification task.

For simplicity, I sample 1M names for training and 100k for testing with a 50-50 balance between companies and people. Since we have balanced classes and ChatGPT cannot produce scores or probabilities (so we cannot use ROC AUC or average precision, definitely a big limitation of ChatGPT), I decided to use the accuracy as the main metric.

Datasets

First, I will download the datasets from Kaggle and do some basic preprocessing. To reproduce the results, you will need a Kaggle account and the Kaggle command-line tool installed locally. You need to add your API key and username to a file called kaggle.json, placed in the directory defined by the environment variable KAGGLE_CONFIG_DIR.
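As a rough sketch of that setup, the snippet below writes a kaggle.json file into a directory and points KAGGLE_CONFIG_DIR at it. The helper name `write_kaggle_config`, the temporary directory, and the placeholder credentials are made up for illustration; the two JSON keys, `username` and `key`, are the ones used in the kaggle.json file that Kaggle generates for your account.

```python
import json
import os
import stat
import tempfile
from pathlib import Path


def write_kaggle_config(config_dir, username, api_key):
    """Write kaggle.json into config_dir and return its path (hypothetical helper)."""
    config_dir = Path(config_dir)
    config_dir.mkdir(parents=True, exist_ok=True)
    config_path = config_dir / "kaggle.json"
    config_path.write_text(json.dumps({"username": username, "key": api_key}))
    # Restrict the file to the current user, since it holds a secret
    config_path.chmod(stat.S_IRUSR | stat.S_IWUSR)
    # Tell the Kaggle command-line tool where to look for the file
    os.environ["KAGGLE_CONFIG_DIR"] = str(config_dir)
    return config_path


# Placeholder credentials: replace them with the values from your Kaggle account
demo_dir = tempfile.mkdtemp()
demo_path = write_kaggle_config(demo_dir, "your-username", "your-api-key")
```

In practice you would normally download kaggle.json from your Kaggle account page and simply move it into that directory.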


I do some preprocessing, inspired by the same open-source repo that inspired the choice of datasets:

1. Lower case the people dataset, since the companies dataset is all lower case (otherwise I’d suggest keeping the original case, as that can be informative).

2. Remove odd characters and unnecessary spaces.

3. Remove empty and null rows.

# Import inferred from the calls below; the notebook has the full list
import pandas as pd

companies = pd.read_csv("companies_sorted.csv", usecols=["name"])
people = (
    pd.read_csv("data.tsv", sep="\t", usecols=["primaryName"])
    # Since the companies are all lower case, we do the same here to be fair
    .assign(name=lambda df: df.primaryName.str.lower())
    .drop("primaryName", axis=1)
)

df = pd.concat(
    (companies.assign(label="company"), people.assign(label="person"))
).sample(frac=1.0, random_state=42)

invalid_letters_pattern = r"""[^a-z0-9\s\'\-\.\&]"""
multiple_spaces_pattern = r"""\s+"""

df["clean_name"] = (
    df.name.str.lower()
    .str.replace(invalid_letters_pattern, " ", regex=True)
    .str.replace(multiple_spaces_pattern, " ", regex=True)
    .str.strip()
)

df = df[
    ~df.clean_name.isin(["", "nan", "null"]) & ~df.clean_name.isna() & ~df.label.isna()
][["clean_name", "label"]]

df.head(10)

| | name | label |
|---|---|---|
| 10103038 | jinjin wang | person |
| 5566324 | native waterscapes, inc. | company |
| 8387911 | jeff killian | person |
| 6783284 | lisa mareck | person |
| 9824680 | pablo sánchez | person |
| 6051614 | dvc sales | company |
| 6479728 | orso balla | person |
| 4014268 | two by three media | company |
| 2093936 | house of light and design | company |
| 11914237 | hamdy faried | person |
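To make the cleaning rules above concrete, here is a small pure-Python sketch that applies the same two regular expressions to individual strings. The function name `clean_name` and the sample inputs are illustrative only; the patterns are copied from the pandas code.

```python
import re

# Same patterns as in the pandas preprocessing code
INVALID_LETTERS = re.compile(r"""[^a-z0-9\s\'\-\.\&]""")
MULTIPLE_SPACES = re.compile(r"""\s+""")


def clean_name(name):
    """Lower-case a name, blank out disallowed characters, and squeeze whitespace."""
    lowered = name.lower()
    no_odd_chars = INVALID_LETTERS.sub(" ", lowered)
    return MULTIPLE_SPACES.sub(" ", no_odd_chars).strip()


for raw in ["Native Waterscapes, Inc.", "Pablo Sánchez", "  AT&T   Corp  "]:
    print(repr(raw), "->", repr(clean_name(raw)))
```

Note that the pattern only keeps unaccented letters, so an accented character is replaced by a space. That is the likely origin of strings such as “compagnie lyonnaise du cin ma” in the error tables later on.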

From the value counts below, we can see that we have 19.5 million names, 63% being people and 37% companies.

df.label.value_counts()

person 12344506
company 7173422
Name: label, dtype: int64

I sample 550k people and 550k companies to make the dataset balanced and then split it into 1M training and 100k testing examples.

from sklearn.model_selection import train_test_split

train_df = pd.concat(
    (
        df[df.label == "company"].sample(n=1_100_000 // 2),
        df[df.label == "person"].sample(n=1_100_000 // 2),
    )
)

train_df, test_df = train_test_split(train_df, test_size=100_000, random_state=42)

I save the processed datasets for easier iteration. Tip: If you have large datasets, always try to save your preprocessed datasets to disk to prevent wasted computation.

import gc

# Saving the processed dataframes locally for quicker iterations
train_df.to_csv("train_df.csv", index=False)
test_df.to_csv("test_df.csv", index=False)

# Freeing up the memory used by the dataframes
del companies, people, df, train_df, test_df
gc.collect()

Since I freed up the memory of all datasets, I need to reload them:

# Just run from here if the datasets already exist locally
train_df = pd.read_csv("train_df.csv")
test_df = pd.read_csv("test_df.csv")

train_df.shape, test_df.shape

((1000000, 2), (100000, 2))

Now I have a single training set with roughly 500k people and 500k companies, and a single test set with roughly 50k people and 50k companies. The split is random rather than stratified, so the classes are only approximately balanced within each set.

Exploratory data analysis

Before I actually get to the fun part, let’s understand the data we have first. I have two hypotheses to explore:

- Do we see a different distribution of words per class? I’d expect some words like “ltd” to be present only in companies and words like “john” to be over-represented in people’s names.

- Does sentence length vary by class? I expect a wider range for companies than for people, as company names can run from just two characters like “EY” to mouthfuls like “National Railroad Passenger Corporation, Amtrak”. Alternatively, I could look at the number of words per sentence, since most Western names are around 3 words.

Anyway, beware the Falsehoods Programmers Believe About Names.

words_df = (
    train_df.assign(word=train_df.clean_name.str.split(" +"))
    .explode("word")
    .groupby(["word", "label"])
    .agg(count=("clean_name", "count"))
    .reset_index()
)

total_words = words_df["count"].sum()
words_df = words_df.assign(freq=words_df["count"] / total_words)

person_words = (
    words_df[words_df.label == "person"].sort_values("freq", ascending=False).head(25)
)
company_words = (
    words_df[words_df.label == "company"].sort_values("freq", ascending=False).head(25)
)

First, let’s take a look at the word counts by label:

We can see our hypothesis was right: Some words are quite predictive of being a person or company name. Note that there is no intersection between the top 25 words for people and companies. This insight implies a simple but effective baseline would be a model built on top of word count, which is what I do next. However, there is a long tail of possible names, so we have to go beyond the most common ones.

Another way to see how the distributions differ is by sentence length:

Company names tend to be longer on average and have a higher variance, but interestingly they both peak at 13 characters. I could use sentence length as a feature, but let’s stick to word counts for now.
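The length comparison can be sketched in a few lines. This is not the code behind the plot in the notebook: it is a standard-library illustration on a handful of names taken from the sample table earlier in the post, and the function name `length_stats` is made up.

```python
from statistics import mean, pstdev

# Toy rows standing in for train_df[["clean_name", "label"]]
toy_rows = [
    ("jeff killian", "person"),
    ("lisa mareck", "person"),
    ("orso balla", "person"),
    ("dvc sales", "company"),
    ("two by three media", "company"),
    ("house of light and design", "company"),
]


def length_stats(rows):
    """Return {label: (mean length, standard deviation)} measured in characters."""
    lengths = {}
    for name, label in rows:
        lengths.setdefault(label, []).append(len(name))
    return {label: (mean(vals), pstdev(vals)) for label, vals in lengths.items()}


for label, (avg, spread) in length_stats(toy_rows).items():
    print(f"{label}: mean={avg:.1f} chars, std={spread:.1f}")
```

On the real training set, the same idea would be a group-by on the label column over the string lengths of clean_name.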

Baseline: Word counts + Logistic regression

Let’s start with a simple and traditional NLP baseline: word frequency and logistic regression. Alternatively, we could use Naive Bayes, but I prefer logistic regression for its greater generality and easier interpretation as a linear model.

Typically, we use TF-IDF instead of word counting for document classification. Since names are quite short and repetitive words (e.g. “John”) are predictive, I believe it not to be useful here. Indeed, a quick test showed no improvement to accuracy by using TF-IDF.

Another variation is to use n-grams for either words or characters: they’re left as a suggestion to the reader.
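For readers who want a head start on that suggestion, here is a minimal sketch of what character n-gram features look like. The function `char_ngrams` is a hand-rolled illustration, not part of the notebook; scikit-learn's CountVectorizer offers the same idea through its `analyzer="char"` or `analyzer="char_wb"` options combined with `ngram_range`, although its padding details differ from this sketch.

```python
from collections import Counter


def char_ngrams(name, n_min=2, n_max=3):
    """Count character n-grams, padding with spaces so name boundaries are visible."""
    padded = f" {name} "
    counts = Counter()
    for n in range(n_min, n_max + 1):
        for start in range(len(padded) - n + 1):
            counts[padded[start : start + n]] += 1
    return counts


grams = char_ngrams("dvc sales")
print(grams.most_common(5))
```

Character n-grams can pick up suffixes and abbreviations (for example a trailing "inc" or "ltd") even when the full word is outside the 10,000-word vocabulary used by the baseline.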

# Imports inferred from the calls below; the notebook has the full list
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

text_transformer = CountVectorizer(analyzer="word", max_features=10000)
X_train = text_transformer.fit_transform(train_df["clean_name"])
X_test = text_transformer.transform(test_df["clean_name"])

logreg = LogisticRegression(C=0.1, max_iter=1000).fit(
    X_train, train_df.label == "person"
)

preds = logreg.predict(X_test)
baseline_accuracy = accuracy_score(test_df.label == "person", preds)
print(f"Baseline accuracy is {round(100*baseline_accuracy, 2)}%")

Baseline accuracy is 89.49%

89.5% accuracy is not bad for a linear model! Remember, since the datasets are balanced, a baseline accuracy without any information would be 50%. Whether this is good or bad in an absolute sense depends on the actual application of the model. It also depends on the distribution of the words this model would actually see in production. The datasets I used are quite general, containing all kinds of people and company names. In a real application, the names could be more constrained (e.g. only coming from a particular country).

Now, let’s see what mistakes the model makes (error analysis). It’s always interesting to look at examples where the model makes the worst mistakes. If we have a tabular dataset, it might be difficult to interpret what is going on, but, for perceptual data a human can understand (text, image, sound), this leads to invaluable insights into the model.

import numpy as np

test_df["proba_person"] = logreg.predict_proba(X_test)[:, 1]
test_df["abs_error"] = np.where(
    test_df.label == "person", 1 - test_df.proba_person, test_df.proba_person
)
test_df.sort_values("abs_error", ascending=False)[
    ["clean_name", "label", "proba_person"]
].head(10)

| | clean_name | label | proba_person |
|---|---|---|---|
| 60581 | co co mangina | person | 0.000206 |
| 49398 | buster benton and the sons of blues | person | 0.000984 |
| 6192 | best horizon consulting | person | 0.001613 |
| 83883 | les enfants du centre de loisirs de chevreuse | person | 0.002633 |
| 84646 | manuel antonio nieto castro | company | 0.997350 |
| 32669 | chris joseph | company | 0.996298 |
| 8545 | hub kapp and the wheels | person | 0.004568 |
| 77512 | michael simon p.a. | company | 0.994109 |
| 71392 | dylan ryan teleservices | company | 0.993017 |
| 64777 | netherlands national field hockey team | person | 0.007220 |

We can see that the mistakes are mostly understandable: There are many companies named just like people. How could the model know Chris Joseph is a company and not a person? The only way would be with information not available in the data I provided for its learning. We also see mislabelings in the people dataset: “netherlands national field hockey team” and “best horizon consulting” do not sound like people names!

This implies a high-leverage activity here would be cleaning the people dataset. If you want to make the data cleaning process sound sexier, just call it data-centric AI (just kidding: data-centric AI is actually a good framework to use for real-life machine learning applications where, in almost all cases, data trumps modelling).

FastAI LSTM fine tuning

For the first more complex machine learning model, let’s start with FastAI due to its simple interface. Following the suggestion of this article, I used an AWD_LSTM model which was pre-trained as a language model that predicts the next word using Wikipedia as dataset. Then, I fine-tune the model on our classification problem. FastAI’s fine_tune works in the following way: in the first epoch, it only trains the head (the newly inserted neural network on top of the pre-trained language model), then, for all subsequent epochs, it trains the whole model together. FastAI uses many tricks to make the training more effective, all of which are wrapped in a simple function call. While convenient, this makes it harder to understand what is going on behind the scenes and to customize the training.

# Standard fastai text import, assumed here; see the notebook for the exact one
from fastai.text.all import *

fastai_df = pd.concat((train_df.assign(valid=False), test_df.assign(valid=True)))
dls = TextDataLoaders.from_df(
    fastai_df, text_col="clean_name", label_col="label", valid_col="valid"
)
learn = text_classifier_learner(dls, AWD_LSTM, drop_mult=0.5, metrics=accuracy)

learn.fine_tune(5, 1e-2)

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.513145 | 0.397019 | 0.802810 | 03:37 |

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.167889 | 0.137422 | 0.952030 | 07:53 |
| 1 | 0.157485 | 0.145000 | 0.956200 | 07:50 |
| 2 | 0.122625 | 0.139295 | 0.963160 | 07:53 |
| 3 | 0.112604 | 0.112886 | 0.968730 | 07:53 |
| 4 | 0.111916 | 0.111421 | 0.970460 | 07:53 |

We end up with 97.1% accuracy, almost 8 percentage points higher than our baseline! Not bad for a few lines of code and roughly one hour of GPU time. Can we do better? Let’s try using a 🤗 transformer.

Hugging Face DistilBERT classification head training

Hugging Face offers hundreds of possible deep learning models for inference and fine-tuning. I chose DistilBERT due to time and GPU memory constraints. By default, Hugging Face trainer will fine-tune all the weights of the model, but now I just want to train the classification head, which is a two-layer fully-connected neural network (aka MLP). The reason is twofold: 1. We’re dealing with a simple problem and 2. I don’t want to leave the model training for too long to make reproducibility simpler and reduce GPU costs. I worked backwards from the previous results: Since FastAI took roughly one hour, I wanted to use the same GPU time budget here.

To only train the classification head, I had to use the PyTorch interface, which allows for more flexibility. First, I download DistilBERT’s tokenizer, apply it to our dataset, then download the model itself, mark all layers as requiring no gradient (i.e. not trainable), and then train the classification head.

# Imports inferred from the calls below; see the notebook for the exact ones
from torch.utils.data import DataLoader
from transformers import AutoModelForSequenceClassification, AutoTokenizer

batch_size = 32
num_epochs = 3
learning_rate = 3e-5

tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")

First, I apply the DistilBERT tokenizer to our datasets:

tokenized_train_df = tokenizer(
    text=train_df["clean_name"].tolist(), padding=True, truncation=True
)
tokenized_test_df = tokenizer(
    text=test_df["clean_name"].tolist(), padding=True, truncation=True
)

Now, I create a PyTorch dataset that is able to handle the input format given by the tokenizer (tokens + attention mask), alongside the labels:

train_dataset = NamesDataset(
    tokenized_train_df, (train_df.label == "person").astype(int)
)
test_dataset = NamesDataset(tokenized_test_df, (test_df.label == "person").astype(int))

train_dataloader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataloader = DataLoader(test_dataset, batch_size=batch_size)

model = AutoModelForSequenceClassification.from_pretrained(
    "distilbert-base-uncased", num_labels=2
)

I set all the base model parameters as non-trainable (so that only the classification head is trained):

for param in model.distilbert.parameters():
    param.requires_grad = False

Finally, I actually train the model (see full training and evaluation code in the notebook):

epoch 0: test accuracy is 96.527%
epoch 1: test accuracy is 96.776%
epoch 2: test accuracy is 96.854%

We got 96.9% accuracy, essentially the same as the FastAI LSTM model. This implies the extra complexity here was for nought. Of course, this problem is a simple one: If I had a more complex problem, I’m sure using a stronger pre-trained language model would give an edge relative to the simpler LSTM trained on Wikipedia. Also, by not fine-tuning the whole network, we miss out on the full power of the transformer. But this suggests that you shouldn’t write off FastAI without trying, which, as I show above, is quite simple.

Let’s see which mistakes this model is making:

test_df["proba_person"] = test_preds
test_df["abs_error"] = np.where(
    test_df.label == "person", 1 - test_df.proba_person, test_df.proba_person
)
test_df.sort_values("abs_error", ascending=False)[
    ["clean_name", "label", "proba_person"]
].head(10)

| | clean_name | label | proba_person |
|---|---|---|---|
| 6192 | best horizon consulting | person | 0.000008 |
| 47006 | rolf schneebiegl & seine original schwarzwald-musi | person | 0.000326 |
| 58512 | development | person | 0.000363 |
| 9404 | xin yuan yao | company | 0.999556 |
| 59585 | cheng hsong | company | 0.999490 |
| 46757 | compagnie lyonnaise du cin ma | person | 0.000550 |
| 38224 | pawel siwczak | company | 0.999389 |
| 25983 | sarah hussain | company | 0.999311 |
| 23870 | manjeet singh | company | 0.999295 |
| 73909 | glassworks | person | 0.000776 |

Again, we see cases of clear mislabeling in the case of person and some tough cases in the case of company. Given the accuracy and the worst mistakes, we may be at the limit of what can be done for this dataset without cleaning it. Now, the final question: Can I get the same level of accuracy without any supervised training at all?

ChatGPT API one-shot learning

I will use OpenAI’s API to ask ChatGPT to do name classification for us. First, I need to define the prompt very carefully, what is now called prompt engineering. There are some rules of thumb for prompt engineering. For example, always give concrete examples before asking ChatGPT to generalize to new ones.

The ChatGPT API has three prompt types (set through the role field of each message):

- System: Helps set the tone of the conversation and gives overall directions

- User: Represents yourself, use it to state your task or need

- Assistant: Represents ChatGPT, use it to give examples of valid or reasonable responses

You can mix and match all prompt types, but I suggest starting with the system one, having at least one round of task-response examples, then restating the task that will actually be completed by ChatGPT.
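The ordering suggested above (system message, one or more task-response rounds, then the real task) can be sketched as a small helper. This is an illustrative variation, not the prompt used in this post: the function name `build_messages` is made up, and unlike the post's prompt it spells out the example names inside the example user turn.

```python
def build_messages(system_prompt, task_prompt, example_rounds, names):
    """Assemble chat messages: system, example rounds, then the real task."""
    messages = [{"role": "system", "content": system_prompt}]
    for example_names, example_labels in example_rounds:
        # Each round pairs a user request with the answer we would like to see
        user_text = task_prompt + "\n" + "\n".join(example_names)
        answer = "\n".join(
            f"{name}: {label}" for name, label in zip(example_names, example_labels)
        )
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": answer})
    # Restate the task with the names that actually need classifying
    messages.append({"role": "user", "content": task_prompt + "\n" + "\n".join(names)})
    return messages


messages = build_messages(
    system_prompt='You only classify names as "company" or "person".',
    task_prompt="Classify the following names into company or person:",
    example_rounds=[(["google", "john smith"], ["company", "person"])],
    names=["dvc sales", "lisa mareck"],
)
```

The resulting list has the same shape as the messages argument that the chat API expects: a list of dictionaries with role and content keys.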

Here, I ask ChatGPT to classify 10 names at a time into person or company. If I ask for more, say 100 names, there is a higher chance of failure (e.g. it sees a weird string and complains there is nothing it can do regarding the whole batch). If there is still a failure, I do a backup query on each name individually. If ChatGPT fails to provide a clear answer on an individual name, I default to answering “company” since this class contains more problematic strings.

Finally, how can I extract the labels from ChatGPT’s response? It might answer differently, for example, by fixing a misspelling or by using uppercase instead of lowercase (system prompt notwithstanding). In general, it answers in the same order, but can I rely on that completely for all 100k examples? To be safe, I do a simple string matching based on the Levenshtein distance to match the names I query with ChatGPT’s responses.
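The matching idea can be illustrated without any dependency. The sketch below uses a hand-written edit distance in place of the Levenshtein library used in this post, and the helper names `levenshtein` and `match_responses` as well as the sample data are assumptions made for the example.

```python
def levenshtein(a, b):
    """Edit distance between two strings (insertions, deletions, substitutions)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            substitution = previous[j - 1] + (char_a != char_b)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1]


def match_responses(queried_names, response_pairs):
    """Map each queried name to the label of the closest response name."""
    matched = {}
    for name in queried_names:
        closest = min(response_pairs, key=lambda pair: levenshtein(name, pair[0]))
        matched[name] = closest[1]
    return matched


# The response "fixes" a misspelling and comes back in a different order
queried = ["jonh deere", "pedro tabacof"]
responses = [("pedro tabacof", "person"), ("john deere", "company")]
print(match_responses(queried, responses))
```

Matching by closest string rather than by position means a reordered or lightly edited answer still lands on the right query, at the cost of comparing every query with every response line in the batch.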

To reproduce the code below, you need to have an OpenAI account and OPENAI_API_KEY set in your environment.

system_prompt = """
You are a named entity recognition expert.
You only answer in lowercase.
You only classify names as "company" or "person".
"""

task_prompt = "Classify the following names into company or person:"

examples_prompt = """google: company
john smith: person
openai: company
pedro tabacof: person"""

base_prompt = [
    {"role": "system", "content": system_prompt},
    {"role": "user", "content": task_prompt},
    {"role": "assistant", "content": examples_prompt},
    {"role": "user", "content": task_prompt},
]

# Imports inferred from the calls below; see the notebook for the exact ones
import os

import Levenshtein as lev
import openai
from joblib import Parallel, delayed

all_preds = []


def get_chatgpt_preds(batch_df):
    """Gets predictions for a whole batch of names using ChatGPT's API"""
    prompt = base_prompt.copy()
    prompt += [{"role": "user", "content": "\n".join(batch_df.clean_name)}]
    openai.api_key = os.getenv("OPENAI_API_KEY")

    try:
        # Max tokens as 2000 is enough in practice for 10 names plus the prompt
        # Temperature is set to 0 to reduce ChatGPT's "creativity"
        # Model `gpt-3.5-turbo` is the latest ChatGPT model, which is 10x cheaper than GPT3
        # Note: `openai.ChatCompletion` is the interface of the openai package before version 1.0
        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo", messages=prompt, max_tokens=2000, temperature=0
        )

        # Since we gave examples as "name: class", ChatGPT almost always follows this pattern in its answers
        text_result = response["choices"][0]["message"]["content"]
        clean_text = [
            line.lower().split(":") for line in text_result.split("\n") if ":" in line
        ]

        # Fallback query: if I cannot find enough names on the response, I ask for each name separately
        # Without it, we'd have parsing failures once every 10 or 20 batches
        if len(clean_text) < len(batch_df):
            clean_text = []
            for _, row in batch_df.iterrows():
                prompt = base_prompt.copy()
                prompt += [{"role": "user", "content": row.clean_name}]
                response = openai.ChatCompletion.create(
                    model="gpt-3.5-turbo", messages=prompt, max_tokens=2000, temperature=0
                )
                row_response = response["choices"][0]["message"]["content"]
                if ":" in row_response:
                    clean_text.append(
                        [row_response.split(":")[0], row_response.split(":")[-1]]
                    )
                else:
                    clean_text.append([row.clean_name, "company"])  # defaults to company

        # To ensure I'm matching the query and the corresponding answers correctly,
        # I find the closest sentences in the Levenshtein distance sense
        batch_df = batch_df.copy()
        batch_df = batch_df.merge(pd.DataFrame({"resp": clean_text}), how="cross")
        batch_df["resp_name"] = batch_df.resp.str[0].str.strip()
        batch_df["resp_pred"] = batch_df.resp.str[-1].str.strip()
        batch_df["dist"] = batch_df.apply(
            lambda row: lev.distance(row.clean_name, row.resp_name), axis=1
        )
        batch_df["rank"] = batch_df.groupby("clean_name")["dist"].rank(
            method="first", ascending=True
        )
        batch_df = batch_df.query("rank==1.0")[["clean_name", "label", "resp_pred"]]

    # Catches all errors
    # Errors only arise due to failures from OpenAI API and should be quite rare
    # Ideally, we should keep retrying with exponential backoff
    except Exception as e:
        print("Exception:", str(e))
        batch_df = batch_df.copy()
        batch_df["resp_pred"] = "company"  # defaults to company

    return batch_df


chatgpt_num_workers = 32

chatgpt_batch_size = 10
split_size = len(test_df) // chatgpt_batch_size
test_batches = np.array_split(test_df, split_size)

chatgpt_preds = Parallel(n_jobs=chatgpt_num_workers, verbose=5)(
    delayed(get_chatgpt_preds)(batch_df) for batch_df in test_batches
)

chatgpt_preds = pd.concat(chatgpt_preds)
chatgpt_accuracy = (chatgpt_preds.resp_pred == chatgpt_preds.label).sum() / len(
    chatgpt_preds
)
print(f"ChatGPT accuracy is {round(100*chatgpt_accuracy, 2)}%")

ChatGPT accuracy is 97.52%

Incredible! With 97.5% accuracy, ChatGPT managed to outperform complex neural networks trained for this specific task. One explanation is that it used its knowledge of the world to understand some corner cases that the models could not have possibly learned from the training set alone. In some sense, this would be a form of “leakage”: perhaps ChatGPT would be weaker classifying companies founded after its cutoff date (2021).

ChatGPT is also quite cheap to run: the cost to classify the 100k examples was just under $5. It took 18 min to score all the examples, which could probably be improved by better parallelism. If you don’t use any parallelism at all, it will be much slower.
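When many workers call the API in parallel, transient failures and rate limits become more likely, and the code comments above mention retrying with exponential backoff as the ideal handling. A minimal, library-free sketch of that idea follows; `call_with_backoff`, its parameters, and the fake `flaky_call` are all hypothetical and not part of the notebook.

```python
import random
import time


def call_with_backoff(func, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call func(), retrying with exponentially growing delays when it raises."""
    for attempt in range(max_retries):
        try:
            return func()
        except Exception:
            if attempt == max_retries - 1:
                raise  # Out of retries: let the caller decide what to do
            # The delay doubles on each attempt; jitter spreads out parallel workers
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)


# Demo with a fake API call that fails twice before succeeding
calls = {"count": 0}


def flaky_call():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("simulated API failure")
    return "ok"


# The sleep function is swapped out so the demo runs instantly
result = call_with_backoff(flaky_call, sleep=lambda seconds: None)
```

In the prediction function, the API request would be the callable passed to such a helper, so that a batch only falls back to the default “company” label after the retries are exhausted.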

Let’s see the raw responses ChatGPT gives:

chatgpt_preds.resp_pred.value_counts().head(20)

person 50955
company 48913
company or person (not enough context to determine) 13
it is not clear whether it is a company or a person. 11
neither (not a name) 7
not a name 6
it is not clear whether this is a company or a person. 5
cannot be classified as either company or person 4
company or person (not enough information to determine) 4
i'm sorry, i cannot classify this name as it does not appear to be a valid name. 3
neither 3
it is not clear whether it is a person or a company. 2
n/a (not a name) 2
i am sorry, i cannot classify this name as it does not provide enough information to determine if it is a company or a person. 2
this is not a name. 2
this is not a valid name. 2
person (assuming it's a misspelling of a person's name) 2
neither person nor company (not a name) 2
person or company (without more context it is difficult to determine) 2
place 2
Name: resp_pred, dtype: int64

For the vast majority of cases, ChatGPT answers as I request: person or company. In very rare cases, it states it doesn’t know, it’s not clear or could be both. What are such examples in practice?

chatgpt_preds[~chatgpt_preds.resp_pred.isin(["person", "company"])][["clean_name", "label", "resp_pred"]].head(10)

| | clean_name | label | resp_pred |
|---|---|---|---|
| 55 | alkj rskolen ringk bing | company | neither (not a valid name) |
| 55 | 81355 | person | cannot be classified without more context |
| 22 | telepathic teddy bear | person | neither |
| 11 | agebim | company | it is not clear whether it is a company or a person. |
| 44 | i quit smoking | company | neither company nor person |
| 33 | saint peters church | company | company (assuming it's a church organization) |
| 88 | holy trinity lutheran church akron oh | company | company (assuming it's a church organization) |
| 55 | displayname | company | company or person (not enough context to determine) |
| 66 | ken katzen fine art | company | company or person (not enough context to determine) |
| 66 | columbus high school | company | company (assuming it's a school) |

The names ChatGPT cannot classify are definitely tricky, like “81355” or “telepathic teddy bear”. In some cases, like for “saint peters church”, it does get it right with some extra commentary in parentheses. All in all, I’d say ChatGPT did an amazing job and failed in a very human way.
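Answers with extra commentary do not equal “person” or “company” exactly, so the string comparison used for the accuracy above counts them as errors. One possible post-processing step, sketched here as an assumption rather than something done in the notebook, is to strip the parenthetical commentary and map anything still ambiguous to an explicit “unknown”. The function name `normalize_label` is made up.

```python
import re


def normalize_label(raw_response):
    """Map a free-form answer to "person", "company", or "unknown"."""
    text = raw_response.strip().lower()
    # Drop parenthetical commentary such as "(assuming it's a school)"
    text = re.sub(r"\(.*?\)", "", text).strip()
    if text in ("person", "company"):
        return text
    return "unknown"


examples = [
    "company (assuming it's a church organization)",
    "person (assuming it's a misspelling of a person's name)",
    "company or person (not enough context to determine)",
    "neither (not a name)",
]
for answer in examples:
    print(answer, "->", normalize_label(answer))
```

Given how rare these answers are in the value counts above, the effect on the overall accuracy would be tiny, but an explicit “unknown” bucket makes it easy to route such names to a fallback rule or a human.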

Conclusion

I have explored 4 ways to classify names: from a simple logistic regression to a complex neural network transformer. In the end, a general API from ChatGPT outperformed them all without any proper supervised learning.

| Method | Accuracy (%) |
|---|---|
| Baseline | 89.5 |
| Benchmark | 95 |
| FastAI | 97.1 |
| Hugging Face | 96.9 |
| ChatGPT | 97.5 |

There is a lot of hype around LLMs and ChatGPT, but I’d say it does deserve the attention it’s getting. Those models are transforming tasks that required deep machine learning knowledge into software + prompt engineering problems. As a data scientist, I’m not worried about those models taking over my job, as predictive modelling is only a small aspect of what a data scientist does. For more thoughts on this, check out The Hierarchy of Machine Learning Needs.

Acknowledgements

I’d like to thank Erich Alves for some of the ideas explored in this post. I also thank Erich and Raphael Tamaki for reviewing the post and giving feedback.